Kayla Daughters & Dr. Cindy E. McCrea

Background

The goal of this project was to assess whether undergraduate teaching assistants grade student writing reliably when given a rubric and training.

Methods

Introduction to Psychology students (N=132) completed a series of weekly essays over one semester.


- Interrater reliability was measured with the intraclass correlation coefficient (ICC) in SPSS.
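SPSS offers several ICC models, and the model used here is not stated. The sketch below is a minimal illustration under two assumptions: every student was scored by the same number of graders, and different TAs could grade different students. Under those assumptions it computes a one-way random-effects, single-measure ICC. The function name `icc_oneway` and the list-of-lists input layout are hypothetical.

```python
from statistics import mean


def icc_oneway(ratings):
    """One-way random-effects, single-measure ICC (hypothetical helper).

    ratings: one inner list per student, each holding k scores from k graders.
    Assumes every student has the same number (k >= 2) of scores.
    """
    n = len(ratings)
    k = len(ratings[0])
    if n < 2 or k < 2 or any(len(r) != k for r in ratings):
        raise ValueError("need >= 2 students, each with the same number (>= 2) of scores")

    grand_mean = mean(s for r in ratings for s in r)
    student_means = [mean(r) for r in ratings]

    # Between-student mean square: how much students differ from one another.
    ss_between = k * sum((m - grand_mean) ** 2 for m in student_means)
    ms_between = ss_between / (n - 1)

    # Within-student mean square: how much graders disagree on the same student.
    ss_within = sum((s - m) ** 2 for r, m in zip(ratings, student_means) for s in r)
    ms_within = ss_within / (n * (k - 1))

    return (ms_between - ms_within) / (ms_between + (k - 1) * ms_within)


# Hypothetical scores from two graders for four students.
print(icc_oneway([[4, 5], [2, 2], [5, 5], [3, 4]]))
```

Perfect agreement between graders gives an ICC of 1.0. SPSS's two-way models (with consistency or absolute-agreement definitions) can give different values on the same data, so this sketch is not a substitute for the SPSS output reported below.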

TA Grading Training

- A group of 11 teaching assistants (TAs) met weekly with a trainer (instructor) to calibrate grading.

- TAs were randomly assigned to grade student work independently. Grades followed a rubric that divided each assignment into three sections: writing quality, engaging in class material, and critical thinking. A Blackboard grading feature was used to blind TAs to each other's grades.

Well-trained TAs grade undergraduate student writing reliably. Agreement between graders is stronger for more objective elements (such as use and comprehension of content) than for more subjective elements (critical thinking and writing quality).

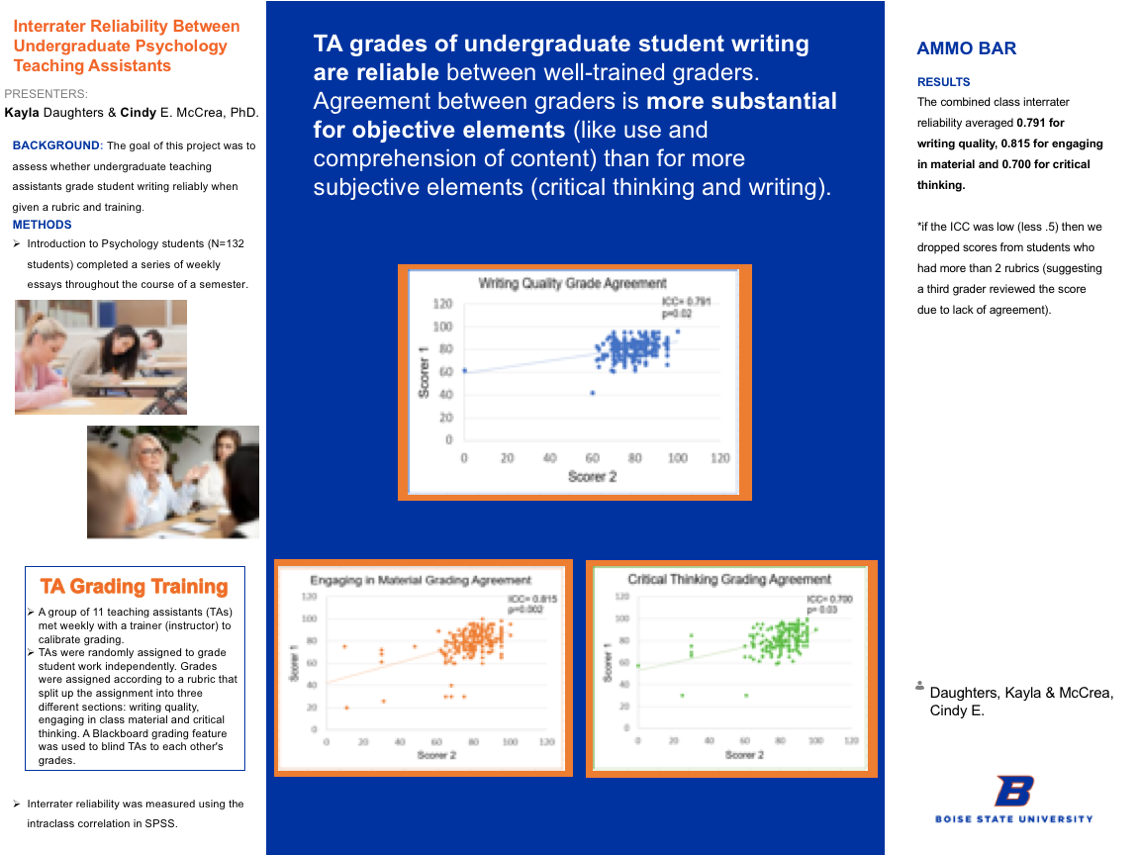

Critical Thinking Grading Agreement

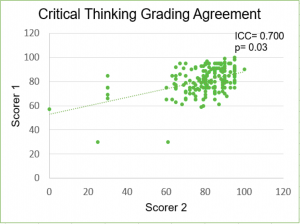

Engaging in Material Grading Agreement

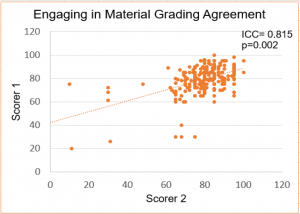

Writing Quality Grading Agreement

Results

Across all classes combined, interrater reliability (ICC) averaged 0.791 for writing quality, 0.815 for engaging in material, and 0.700 for critical thinking.

*If the ICC was low (below .5), we dropped scores from students who had more than two rubrics, because a third rubric suggests that a third grader reviewed the score after the first two graders disagreed.
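The exclusion rule above can be sketched as a simple filter. This is a minimal illustration, assuming rubric scores are stored per student in a dictionary and that the ICC has already been computed for the class; the function name `drop_third_grader_cases` and the data layout are hypothetical.

```python
def drop_third_grader_cases(rubrics_by_student, icc, threshold=0.5):
    """Apply the exclusion rule (hypothetical helper).

    rubrics_by_student: maps a student ID to the list of rubric scores received.
    If icc is below threshold, students with more than two rubrics (a sign that
    a third grader stepped in) are dropped; otherwise all students are kept.
    """
    if icc >= threshold:
        return dict(rubrics_by_student)
    return {
        student: scores
        for student, scores in rubrics_by_student.items()
        if len(scores) <= 2
    }


# Hypothetical data: student "s2" received a third rubric.
scores = {"s1": [4, 5], "s2": [3, 3, 4], "s3": [2, 2]}
print(drop_third_grader_cases(scores, icc=0.42))
```

With an ICC of 0.42 only "s1" and "s3" remain; with an ICC of .5 or higher every student is kept. After filtering, the ICC would be recomputed on the remaining scores.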

Additional Information

For questions or comments about this research, contact Kayla Daughters at kayladaughters@u.boisestate.edu.