You’ve heard of ChatGPT and other Large Language Models (LLMs), and you’re wondering what they mean for education and technology, now and in the future. LLMs aren’t new at Boise State: they are part of some of our coursework, and some of our researchers have been using LLMs in their research for a few years.

The sections below highlight recent events that continue the discussion about LLMs, as well as how LLMs have been part of our curriculum and research.

Events

Boise State University hosted a panel discussion about how ChatGPT is affecting education, including the challenges and opportunities it introduces. The panel included representatives from multiple colleges, including two deans, an expert on LLMs, and the director of the university’s Writing Center.

ChatGPT was the topic of the final Senior Seminar talk of Spring 2023 in the Department of Computer Science. The talk was recorded and is meant to be understandable by anyone, even without a Computer Science background (though a bit of math is required!). Relatedly, the speaker also wrote a Medium article about his thoughts on how ChatGPT will affect education.

Curriculum & Clubs

The CS 436/536 Natural Language Processing (NLP) course covers a range of topics, including probability and information theory, an introduction to linguistics, and neural networks (Kennington 2021). The course has a module on LLMs that explains how the attention mechanism and transformer architectures work. Students gain practical experience fine-tuning LLMs, and most opt to use LLMs in their final projects, which prepares them to help future employers understand what LLMs are, what their limitations are, and how to use them effectively. Computer Science students can take the NLP course as an elective, and other students can take it by completing some of the requirements of the Data Science for STEM Certificate.

Among Boise State University’s many clubs is the AI Club, an active group of students from across campus who come together to talk and learn about AI. Some meetings have focused on how to design AI applications, some have been visits to local companies that use AI in their products, and others have covered ongoing research. Several of these meetings have included LLMs.

Some faculty and students have also started a reading group that meets twice a month to discuss recent research and papers on LLMs.

Research

A team of researchers showed how a language model can be enriched with information that helps determine whether a text resource is educational, with a particular focus on resources aimed at children (Allen et al. 2021). This work came out of the NSF-funded CAST project, led by Dr. Jerry Alan Fails in collaboration with Casey Kennington, Katherine Landau-Wright (College of Education), and Sole Pera (now at TU Delft).

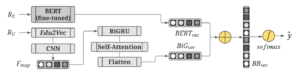

Dr. Casey Kennington leads the Speech, Language, and Interactive Machines (SLIM) Lab, which does research in Natural Language Processing, including a lot of work on language models. One issue with LLMs like ChatGPT is that they are trained only on text, which is different from how humans learn language. In a paper published in 2021, members of the SLIM Lab enriched an LLM with visual information from images, which improved its results on standard benchmarks (Kennington 2021).

Other work from the SLIM Lab is more philosophical and points out some of the issues with LLMs (Kennington and Natouf 2022). LLMs are powerful, but when they are trained only on text, they miss important aspects of linguistic meaning (though LLMs are increasingly being enriched with visual information). The paper argues that many words are concrete (i.e., they refer to physical objects, like “chair”) while many others are abstract (i.e., they are ideas defined by other words), and that LLMs need to capture this kind of information. Another recent article extends these ideas and compares them to how children learn language, and a related article was published on the denotation.io blog on computational semantics.

Clayton Fields, a PhD candidate in the PhD in Computing Program at Boise State University (and a member of the SLIM Lab), is researching ways to make LLMs much smaller while retaining their functionality. He led members of the SLIM Lab in the “BabyLM” challenge, to which they submitted a report paper. He is also exploring how smaller language models can be enriched with information from the environment beyond text, including visual and robotic states, so that the model can learn while it interacts. He recently wrote an article on visual language models that clearly explains the different ways visual information can be incorporated into language models. Clayton’s project is funded by NSF.

Stay tuned because more is to come!