Idaho Literacy Intervention Program: Evaluation and Insights

Report Authors

Matthew May, PhD, Senior Research Associate

Mcallister Hall, Research Associate

Vanessa Crossgrove Fry, PhD, Interim Director

Recommended Citation:

May, M., Hall, M., & Crossgrove Fry, V. (2021). Idaho literacy intervention program evaluation. Idaho Policy Institute, Boise State University.

In 2020, the Idaho Legislature authorized an independent, external evaluation of the state’s literacy intervention program (Program) that will consider: (a) program design, (b) use of funds, including funding utilized for all-day kindergarten, (c) program effectiveness, and (d) an analysis of key performance indicators of student achievement, as well as any other relevant matters. For the third year, Idaho Policy Institute (IPI) was contracted to conduct the evaluation.

Performance data traditionally used in this evaluation is unavailable because of the COVID-19 pandemic. As a result, IPI administered online surveys to teachers (n=494) and administrators (n=101) and conducted in-depth interviews with teachers (n=11) to understand the function and perceptions of the Program across the state. This report also draws on 2019/20 Local Educational Agency (LEA) literacy plans, budgets, and expenditure reports.

Key Findings

Program Design

Teachers surveyed are moderately confident in the IRI by Istation’s ability to accurately identify student performance. Any lack of confidence may be attributable to issues associated with test structure, including student unfamiliarity with technology, poor-quality test audio, and timed questions.

Use of Funds

On average, LEAs use a majority of their funds each year to hire additional personnel or increase the pay of current personnel. The most common personnel employed with literacy funds are support staff who help lead intervention groups and provide more opportunities for small group or one-on-one instruction. Administrators indicated that if their LEA were to receive more literacy funds, they would increase funds dedicated toward personnel.

Program Effectiveness

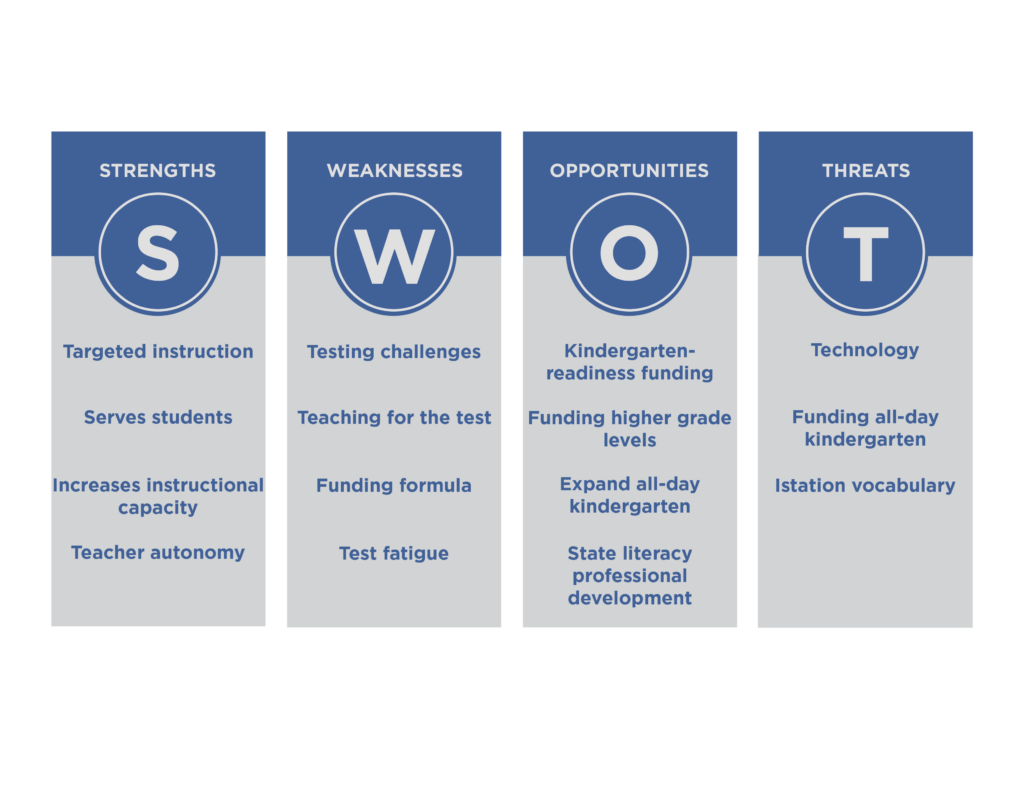

Survey and interview data were used to conduct an analysis of the Program’s strengths, weaknesses, opportunities, and threats (SWOT). Strengths reflect the Program’s positive impact, and opportunities identify areas of potential growth for the Program.

Strengths include the ability to target specific learning gaps among students. As a result, students receive instruction designed for their growth. Additionally, Program funding is being used for items that can be traced directly to the students.

Opportunities identified include allowing the funds to be directed toward kindergarten readiness education. This would require a change in state statute to include non-school age children as funding eligible. Funds could also be allocated to students in grades 4-6 who are performing at a K-3 level. Some respondents indicated that a statewide literacy professional development opportunity would also enhance the Program. Additional Program funding could allow all students the opportunity to attend all-day kindergarten.

All-Day Kindergarten

According to survey data, 37% of LEAs surveyed in the state are using at least some of their state literacy funds toward a version of a free all-day kindergarten program. Examples of the structures discussed in interviews are provided in Table A.

Table A: All-Day Kindergarten Types

| Type | Staff | Access | Prioritization |
|---|---|---|---|
| Part-time individual needs-based | All-day with teacher (alternating) | Limited | Students scoring a 2 attend all day two days a week; students scoring a 3 attend all day four days a week |
| Individual needs-based | Half-day with teacher / half-day with paraeducator | Limited | Students scoring lowest on kindergarten screening |
| School needs-based | Half-day with teacher / half-day with paraeducator | Open | All kindergarten students attend |
| Free kindergarten | All-day with teacher | Open | All kindergarten students attend |
| Fee-based* | All-day with teacher | Limited | First-come, first-served |

*Charging fees/tuition is not allowed per state statute, but it still occurs.

COVID-19 Impact

COVID-19 continues to impact instruction. Many teachers have had to change their instruction this year to include more review, sacrificing practice time, engaging activities, and content depth. Teachers are optimistic that students will produce average IRI scores in the Spring. Teachers also report providing multiple forms of support for students needing literacy intervention during virtual learning sessions.

In 1999, the National Reading Panel was convened by the United States Congress. The 14-member panel reviewed over 100,000 studies on how children learn to read, seeking to determine the most effective evidence-based methods for teaching reading. A major finding was that early reading acquisition depends on understanding the connection between sounds and letters. These findings prompted states across the country to adopt related policies on a broad scale.

That same year, reflecting continued recognition of the critical importance of reading skills, Idaho passed the Idaho Comprehensive Literacy Act. The legislation associated with this act sought to mandate regular assessments of kindergarten through third grade (K-3) students (and make school-level assessment data available to stakeholders), provide intervention for students not meeting grade-level reading proficiency, and implement associated professional development for teachers and administrators.

The original legislation has evolved over time, with the most substantive updates made in response to the outcomes of the 2015 Comprehensive Literacy Plan. One of these updates, implemented by statute in 2016, established the new Literacy Intervention Program (Program), the focus of this report. The Program is now in its fifth year.

In 2020, the Idaho Legislature authorized an independent, external evaluation of the state’s literacy intervention program that will consider: (a) program design, (b) use of funds, including funding utilized for all-day kindergarten, (c) program effectiveness, and (d) an analysis of key performance indicators of student achievement, as well as any other relevant matters.

In addition to collecting original data, Idaho Policy Institute (IPI) collaborated with Idaho State Board of Education (OSBE) and State Department of Education (SDE) staff to identify and obtain secondary data related to this evaluation. The five major sources of data analyzed in this report are:

- An online survey of K-3 teachers in Idaho (n=494)

- A series of in-depth interviews with K-3 teachers from the various Education Regions in Idaho held over the Zoom teleconferencing platform (n=11)

- Individual Local Educational Agency (LEA) Literacy Intervention Plans (Plans)

- An online survey of administrators who oversee the literacy intervention program within their LEA (n=101)

- LEA Literacy Intervention Expenditure Reports

Data from the Plans were combined with the expenditure reports to create a dataset indicating each LEA’s impacted population, budget, and expenditures. This information is reported at the state level and used to identify patterns across funding categories. Further details about the methodology can be reviewed in Appendix A.

Scope and Limitations

This report is the third evaluation of the Program that IPI has completed in as many years. IPI’s two prior reports included extensive analysis of student performance on Idaho Reading Indicator (IRI) assessments. Due to the COVID-19 pandemic and necessary transitions to virtual learning and/or shortened academic years, the Spring IRI assessment was not administered statewide in 2020. With this data unavailable, there is no way to reliably assess student performance in the 2019/20 school year using the IRI.

This limitation has prompted a more qualitative structure for this evaluation than in previous years, so that it can still provide policymakers with valuable information. Analysis is primarily based on survey responses from a) K-3 teachers in Idaho regarding their experience teaching literacy and using the new IRI by Istation, and b) LEA administrators regarding their experience implementing the Program and its funding within their LEA. The inclusion of both teacher and administrator perceptions provides important context for the Program that has not been included in either of IPI’s prior evaluations.

Some limitations on interpreting Program performance noted last year bear repeating. While the 2019/20 school year technically represents the fourth year of the Literacy Intervention Program’s existence, the complete overhaul of the IRI testing instrument and testing process in year three* means it is better understood as the second year of an entirely new intervention. The missing 2019/20 data will also complicate future evaluation of the Program.

*The legacy IRI testing procedure was a one-on-one assessment between the proctor and student. It lasted approximately one minute and measured only a single aspect of literacy: reading fluency. The new IRI from Istation is a computer-adaptive assessment taken on a tablet or computer that can last approximately 30-45 minutes. It measures five foundational literacy skills: alphabetic knowledge, phonemic awareness, vocabulary, spelling, and comprehension.

Program Design

To evaluate the Program’s design, this section focuses on the following three distinct design elements and incorporates survey and interview responses to these areas.

- How well the Program identifies students in need of literacy intervention.

- How well the Program supports progress monitoring students within it.

- The degree to which individual plans facilitate the goals of the program.

These perceptions were not included in prior years’ reports, which were quantitatively focused; this year’s evaluation is more qualitatively focused. It is important to note that qualitative data is best paired with quantitative data to produce a more comprehensive and accurate picture of Program design.

Identifying Students

The Program’s funding formula is based on the number of students scoring basic and below basic on the IRI by Istation. Regarding confidence in the assessment (Table 1), roughly half of administrators indicated they are very confident that the IRI accurately identifies students in their LEA performing at each level. Teachers, however, are less confident in the IRI’s ability to identify student performance levels. Both interview and survey respondents reported using, and preferring, at least one secondary evaluation instrument to confirm student literacy levels.

Table 1: Teacher and Administrator Confidence in IRI Identification of Student Performance

| Respondent and confidence level | Proficient | Basic | Below Basic |
|---|---|---|---|
| Administrators | | | |
| Very confident | 53% | 45% | 50% |
| Moderately confident | 46% | 51% | 49% |
| Not confident | 1% | 2% | 1% |
| Unsure | 0% | 1% | 0% |
| Teachers | | | |
| Very confident | 29% | 24% | 34% |
| Moderately confident | 59% | 57% | 46% |
| Not confident | 11% | 16% | 18% |
| Unsure | 1% | 2% | 2% |

Interview responses imply teachers are reluctant to fully accept IRI by Istation scores because of limitations inherent in the style of the test. The audio, which is integral to the test, is not always easily understood because of its volume, its speed, and an unfamiliar accent that makes some sounds hard to recognize. Students are not able to listen to a sound or instructions more than once because of the short time allotted for answering each question. These issues had previously been identified by the state, and SDE has worked with Istation to produce re-recordings of the audio. These changes are anticipated to be introduced with the Spring 2021 test. Future evaluation may show improved perceptions as a result of this change.

Even when the audio does not cause a problem, teachers report that students are often unable to process a question before being timed out and moved to the next question. Teachers fear this can discourage students and cause test anxiety, affecting test performance. The test is timed to measure automaticity of knowledge, an important aspect of literacy development. Some respondents recognized the importance of this measure and expressed a desire for questions to remain timed, but without a time limit. This would allow teachers to see how quickly students answered a question and whether students know the answer regardless of speed. SDE has indicated it is already in communication with Istation to determine appropriate and feasible solutions.

Technology can also decrease IRI accuracy. Not all students are familiar with the technology used to administer the assessment. All interview participants reported improved performance when kindergarten and first grade students use a touch screen instead of a mouse or track pad. Some noted that young students do not have the dexterity to complete some of the questions. For example, not all students can easily drag and drop options into categories, regardless of device. These required technological skills led one interview respondent to “feel that the scores show how well they’re taking the test, not what they know.”

Progress Monitoring

In addition to hosting the IRI, Istation offers supplemental materials, including a progress monitoring tool and lesson plans targeting specific skills. The progress monitoring tool is commonly used in LEAs across the state: 89% of teachers reported using progress monitoring, and many interview participants reported administering it on a regular basis. Progress monitoring is available to all LEAs at no cost but is not required as part of the Program design. The impact of the progress monitoring tests on Fall and Spring test performance is being measured in a separate, non-IPI study. Many teachers also reported using monitoring instruments other than Istation’s to measure student progress; the impact of these instruments, including in comparison with Istation progress monitoring, is unknown.

Literacy Plans

LEAs are required by state statute to create and send literacy plans to the OSBE each year. LEAs are also expected to share those plans with their staff. The survey asked teachers whether they received their LEA literacy plan in the 2019/20 school year and whether they reviewed it (Table 2).

Similarly, administrators were asked if literacy plans are shared with teachers. Roughly 57% indicated that they do.

Table 2: Teachers who received their literacy plan compared to teachers who reviewed their literacy plans

| Received Literacy Plan | Reviewed: No | Reviewed: Unsure | Reviewed: Yes | Total |
|---|---|---|---|---|
| No | 15% | <1% | 1% | 16% |
| Unsure | 7% | 25% | 1% | 33% |
| Yes | 3% | <1% | 47% | 51% |
| Total | 25% | 26% | 49% | 100% |

Use of Funds

Expense Categories

LEAs are required to submit an expense report of the past year’s Program expenditures at the end of each school year. In 2017/18, expenditures were broken down into four major categories: Personnel, Curriculum, [Student] Transportation, and Other. From 2018/19 on, two additional expense categories were added: Professional Development and Technology.

IPI’s analysis was limited to LEAs for which both Literacy Intervention Plan budgets and end of year expense reports were available. As such, average expense results are for 147 LEAs in 2017/18, 149 LEAs in 2018/19, and 157 LEAs in 2019/20.

IPI analyzed the proportion of annual LEA expenditures in each funding category. Table 3 summarizes expense categories averaged across all LEAs. In 2019/20, the average LEA spent 72% of Program funding on personnel, 17% of their funding on curriculum, 8% on technology, 2% on professional development, 1% on other expenses, and 0.2% on student transportation.
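The averages described above can be read as unweighted means of each LEA’s own category shares, so that every LEA counts equally regardless of size. The Python sketch below illustrates that arithmetic under this assumption (the report does not state whether LEAs were weighted); the function names and dollar figures are hypothetical and are not drawn from actual expense reports.

```python
from statistics import mean

# Hypothetical example data: each dict is one LEA's Program expenditures by category.
# The dollar amounts are illustrative only, not taken from actual LEA expense reports.
# Assumption: every report lists the same categories (0 for categories with no spending).
expense_reports = [
    {"Personnel": 80_000, "Curriculum": 15_000, "Technology": 5_000},
    {"Personnel": 20_000, "Curriculum": 10_000, "Technology": 10_000},
]


def category_shares(report):
    """Return each category's share of one LEA's total Program expenditures."""
    total = sum(report.values())
    return {category: amount / total for category, amount in report.items()}


def average_shares(reports):
    """Unweighted mean of per-LEA shares: every LEA counts equally, regardless of size."""
    per_lea = [category_shares(report) for report in reports]
    categories = per_lea[0].keys()
    return {c: mean(shares[c] for shares in per_lea) for c in categories}


for category, share in average_shares(expense_reports).items():
    print(f"{category}: {share:.1%}")
```

In this example, personnel averages 65% across the two LEAs (80% and 50%). Pooling all dollars first would instead give 100,000 / 140,000, or about 71%, because the larger LEA would dominate the result.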

Table 3: Average Proportion of Expense Report Budget Categories (Literacy Program funding only)

| Expense Category | 2017/18 | 2018/19 | 2019/20 |
|---|---|---|---|
| Personnel | 71.0% | 69.0% | 72.1% |
| Curriculum | 21.0% | 14.7% | 17.2% |
| Transportation | 0.8% | 0.5% | 0.2% |
| Professional Development | * | 2.4% | 1.5% |
| Technology | * | 10.4% | 7.9% |
| Other | 7.3% | 2.7% | 1.2% |

*Note: Professional Development and Technology expense categories were not present on 2017/18 expense reports. As such, percentages cannot be reported in those categories.

On average, the distribution of expenses across categories has been stable over time, with a few exceptions (after accounting for the fluctuation associated with the added expense categories).

Personnel costs have remained stable at approximately 70% of Program expenditures. Survey data reveals that 27% of LEAs use literacy funds to either hire new personnel or increase the pay of current personnel who are taking on new roles. The most common use is hiring support staff to assist teachers and increase small group learning experiences. However, some LEAs hire reading coaches and literacy specialists to work with high need students and provide training and support to teachers. A small number of LEAs are able to hire an additional teacher using literacy funds and consequently decrease class sizes. Almost all administrators (89%) indicated they would dedicate literacy funds toward paying personnel if their funding amount increased.

Curriculum costs have fluctuated over the years. This is likely because some curriculum purchases were one-time, up-front expenses in the early years of the Program that do not need to be repeated annually. Most surveyed administrators who reported recent spending on curriculum used the funds to purchase virtual curricula.

Student transportation has consistently remained the smallest expense category, at less than 1% of expenditures each year and declining, reflecting that few LEAs spend Program funds on transportation. The cap on transportation funding of $100 per student is likely another factor keeping its share of expenses small.

With professional development only reported as a discrete category in two years’ data, it is difficult to identify a trend, although reported costs have remained in the 2% range. Only 10% of administrators in the survey indicated using state literacy funds for professional development. However, 48% would prioritize professional development if their LEA received more literacy funding. Of the teachers who receive literacy-focused professional development, 88% report using strategies learned from those experiences on at least a weekly basis. It should be noted that funding for literacy-focused professional development exists outside of the Program, which may explain why it constitutes a lower share of overall Program expenses.

Technology has likewise been reported as a discrete category for only two years and has accounted for roughly 8-10% of literacy program expenses. Some interview respondents indicated that their school had bought touchscreen computers or tablets to help students take the IRI more easily, as younger students are generally unfamiliar with a mouse or trackpad.

The ‘other’ expense category has declined since 2017/18, following the addition of the professional development and technology categories. In the 2019/20 school year, it accounted for an average of 1% of LEA Program expenditures.

All-Day Kindergarten

According to survey data, 37% of LEAs surveyed in the state are using at least some of their state literacy funds toward a version of a free all-day kindergarten program. Out of these LEAs, only three are able to fully fund their all-day kindergarten program with literacy funds. The structure of all-day kindergarten programs varies across schools. Examples of the structures discussed in interviews are provided in Table 4.

Table 4: All-Day Kindergarten Types

| Type | Staff | Access | Prioritization |
|---|---|---|---|
| Part-time individual needs-based | All-day with teacher (alternating) | Limited | Students scoring a 2 attend all day two days a week; students scoring a 3 attend all day four days a week |
| Individual needs-based | Half-day with teacher / half-day with paraeducator | Limited | Students scoring lowest on kindergarten screening |
| School needs-based | Half-day with teacher / half-day with paraeducator | Open | All kindergarten students attend |
| Free kindergarten | All-day with teacher | Open | All kindergarten students attend |
| Fee-based* | All-day with teacher | Limited | First-come, first-served |

*Charging fees/tuition is not allowed per state statute, but it still occurs.

Budget to Expense Comparison

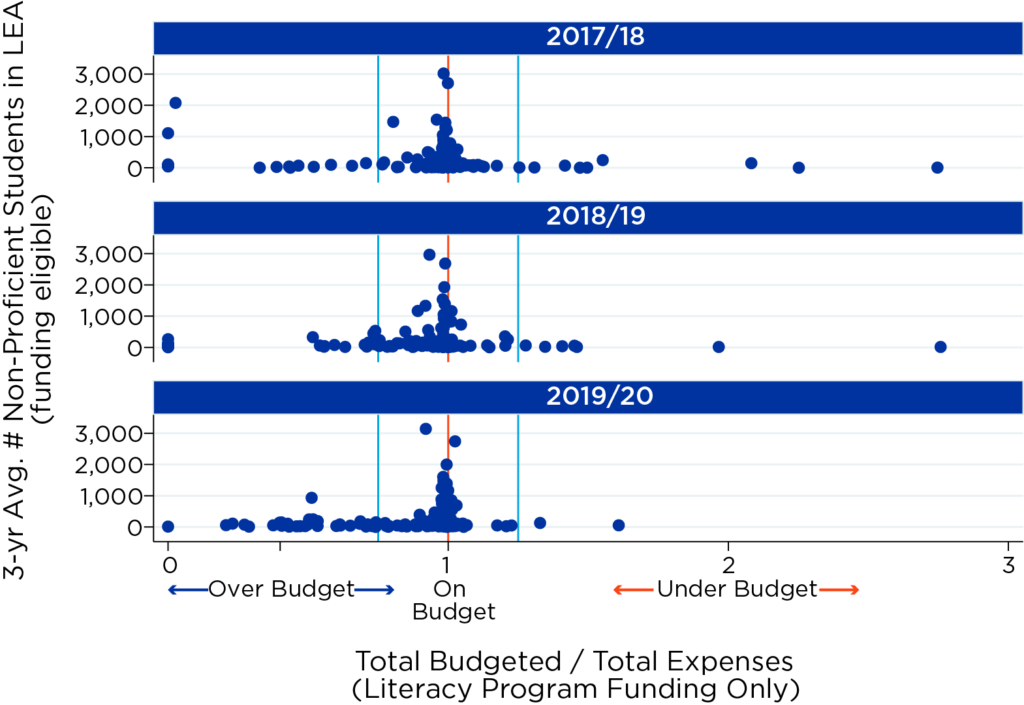

Comparing budgeted dollars to actual expenses provides a relative indicator of Program efficiency from year to year. As in prior reports, IPI compared the start-of-year Plan budgets to the end-of-year expense reports to measure how accurately the budgets anticipated costs. We classify LEAs as “near budget” if expenses are within +/- 25% of anticipated costs. If expenses differ from budgeted costs by more than 25%, LEAs are classified as either “over budget” (if actual expenses are greater) or “under budget” (if actual expenses are less). This allows us to track the financial efficiency of Program budgeting over time. Table 5 and Figure 1 summarize LEA performance over the last three years of the Program.
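The near/over/under classification can be expressed as a simple ratio test. The Python sketch below assumes the +/- 25% band is measured relative to the budgeted amount and that an LEA exactly on the boundary counts as near budget; the report does not spell out either detail, and the function names and dollar figures are hypothetical.

```python
def classify_budget(budgeted, spent, tolerance=0.25):
    """Classify one LEA as "Over", "Near", or "Under" budget.

    Assumption: "near budget" means actual expenses fall within +/- tolerance
    (25% by default) of the budgeted amount, with the boundaries counted as near.
    """
    if budgeted <= 0:
        raise ValueError("budgeted amount must be positive")
    ratio = spent / budgeted
    if ratio > 1 + tolerance:
        return "Over"
    if ratio < 1 - tolerance:
        return "Under"
    return "Near"


def summarize(leas):
    """Return the percentage of LEAs in each budget-accuracy category."""
    labels = ("Over", "Near", "Under")
    counts = dict.fromkeys(labels, 0)
    for budgeted, spent in leas:
        counts[classify_budget(budgeted, spent)] += 1
    return {label: 100 * counts[label] / len(leas) for label in labels}


# Hypothetical (budgeted, spent) pairs in dollars; not actual LEA data.
example = [(100_000, 110_000), (40_000, 52_000), (20_000, 14_000), (250_000, 240_000)]
print(summarize(example))  # {'Over': 25.0, 'Near': 50.0, 'Under': 25.0}
```

Because the band is relative, a $12,000 overrun pushes a $40,000 budget over the threshold, while a $10,000 overrun on a $100,000 budget does not. This is one reason small budgets are more likely to fall outside the near-budget range.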

In the 2017/18 school year, 82% of LEAs were near their projected budget (the outer bounds of this range are represented by the blue lines in Figure 1; the orange line marks where budgets and expenses match exactly). About 12% of LEAs were over budget, while 6% were under budget.

Table 5: Budget to Expense Report Comparison

| Budget Accuracy | 2017/18 | 2018/19 | 2019/20 |
|---|---|---|---|
| Over | 12.2% | 16.3% | 22.5% |
| Near | 81.6% | 78.5% | 76.2% |
| Under | 6.1% | 5.2% | 1.3% |

Figure 1: Budget to Expense Report Comparison

In the 2018/19 school year, the proportion of LEAs near budget declined to 79%, a change of three percentage points. Those over budget increased to 16%, while those under budget decreased to 5%.

In the 2019/20 school year, the proportion of LEAs near budget declined an additional two points to 76%. The proportion of LEAs under budget fell four points to 1%, while the proportion over budget rose six points to 23%. This shift likely reflects the COVID-19 pandemic: the end of the 2019/20 school year coincided with its onset, when numerous schools had to contend with transitions to virtual and distance instruction, costs that were unexpected and therefore not budgeted.

Some of the extreme cases are also likely due to small schools’ budgets being far more sensitive to small changes in expenses, which represent a larger percentage of their initial budget. As seen in Figure 1, most of the LEAs that were over budget in 2019/20 are smaller LEAs, with fewer than 1,000 non-proficient students on average.

Additional data from subsequent years will help improve Program implementation, as it will allow the State to identify LEAs that could benefit from additional financial planning resources.

Program Effectiveness

In past reports, this section focused on the Program’s effectiveness in raising IRI scores. As no Spring IRI was administered in 2019/20, this report instead focuses on the effectiveness of the Program’s structure and processes. To that end, survey and interview data were used to create a SWOT analysis.

SWOT analyses are commonly used in the business sector to help organizations understand and prioritize a program’s internal strengths and weaknesses, as well as the external opportunities and threats it faces. In this analysis, internal factors exist within the structure of the current Program and external factors exist outside the boundaries of the Program. Weaknesses and threats should be treated as areas needing support or restructuring rather than programmatic failures.

Strengths

Targeted Instruction

The IRI by Istation allows teachers to view student performance on each question. Teachers surveyed and interviewed reported using student data to identify learning gaps, differentiate learning, create intervention groups, and plan lessons. Targeting instruction toward specific weaknesses ensures that students are receiving the help they need.

Serves Students

Funding is being used for items that can generally be traced directly to the students. Students get to learn from and use curriculum materials in intervention groups. Students use purchased technology and software programs to access literacy training programs and improve test-taking ability. Increased personnel allow for increased small group and one-on-one instruction to individualize student learning. Professional development provides teachers with literacy-focused teaching strategies that are used within the classroom regularly.

Increases Instructional Capacity

Many schools use Program funding to create new positions at the school requiring varying levels of education, providing opportunities for students to receive individualized instruction more often.

Teacher Autonomy

IRI scores provide insight into what needs to be taught, but the Program allows teachers flexibility in how to teach literacy. Survey responses included 407 unique combinations of more than 30 literacy instruction strategies. Interview respondents from the same LEAs, and even the same schools, used different methods for improving student literacy. Teacher autonomy allows teachers to research and apply best practices, as well as make changes when current strategies are not improving literacy.

Weaknesses

Testing Challenges

As stated earlier in this report, teachers are not always confident that IRI scores accurately identify high-need students because of issues inherent to the IRI by Istation. These issues include low-quality audio, short time frames allotted for answering, and students’ unfamiliarity with, or inability to use, technology. As a result, teachers often use secondary evaluation tools to identify student knowledge. However, SDE is aware of these testing challenges and is currently in communication with Istation to find solutions. SDE indicated Istation has been very responsive and willing to make improvements in a timely manner.

Teaching to the Test

Teachers interviewed and several survey respondents described struggling to stay focused on the main goal of increasing student literacy. Because so much of the Program is focused on student IRI scores, teachers often focus instead on specific aspects of the test they know their students will struggle with. This includes taking time to teach test-taking skills, training students to complete different sections of the test, and teaching specific vocabulary words rather than focusing on the standards the test measures.

Funding Formula

The current funding formula is not always beneficial to small or midsize schools. When schools only receive funding for a few students across several grades, the funds they receive are often insufficient to create a meaningful intervention.

Test Fatigue

Teachers in the interviews also thought students would perform better and benefit from being able to take the test one section at a time instead of completing the entire test in a single sitting. These respondents stated that students have a hard time focusing, sustaining energy, and giving effort throughout the entire test.

Opportunities

Kindergarten Readiness Funding

Administrators and interviewed teachers expressed a desire to use state literacy funds for kindergarten-readiness learning experiences in their LEA. Many students enter kindergarten performing at low levels, and their performance stays low. Instruction for children below school age has been shown to help students enter kindergarten at more equal learning levels, allowing more opportunities for success. The current statute would have to be changed to allow funds to be used for children who are not yet school age.

Funding More Grades

Administrators in the survey indicated that some students in grades 4-6 in their schools read at a K-3 level and that being able to use literacy funds to help these students would benefit student and schoolwide success.

Expand All-Day Kindergarten

According to survey data, 37% of LEAs in the state are using at least some of their state literacy funds toward a version of a free all-day kindergarten program. Four administrators specified that if they were to receive more literacy funding, they would use it to increase access to their all-day kindergarten program. Two respondents expressed the desire to have more funds so they could start funding all-day kindergarten programs. More funds to schools seeking to increase student access to all-day kindergarten would allow students to receive more time in the classroom improving literacy.

State Literacy Professional Development

Professional development makes up, on average, roughly 2% of total literacy fund spending. Only 10% of administrators surveyed indicated using state literacy funds for professional development. However, 48% would prioritize professional development if their LEA received more literacy funding. Almost all surveyed teachers (87%) reported receiving literacy-specific training. The types of professional development varied: some were required, while teachers participated in others voluntarily. A few teachers expressed a desire for a required statewide literacy professional development opportunity to create a more unified approach to literacy across the state. This could be funded with Program funds; however, professional development funding is also available in both statewide and LEA budgets, which LEAs may use instead in order to direct Program funding to other areas.

Threats

Technology and Instrumentation

Teachers interviewed and surveyed consistently raised the struggle of needing to take time to teach their students how to use technology, even though technology skills are not necessary for literacy.

In addition, teachers stated that kindergarten and first grade students are not dexterous enough with a mouse or track pad to complete many of the tasks in the IRI and need to complete the test on a touch screen. Schools often need to buy more or better technology for students, sometimes using literacy funding that could otherwise go toward improving literacy rather than toward devices that better measure it.

Funding All-Day Kindergarten

Teachers and administrators across the state expressed a need and desire for all-day kindergarten programs for all students.

According to interview participants, time is restricted in half-day kindergarten classes and teachers spend most instructional time teaching literacy, preparing students for testing, and administering the IRI and progress monitoring tests. As a result, instructional time for other subjects, such as math and science, is reduced. This implies that all-day kindergarten is a solution for general education purposes, not only literacy. Funding all-day kindergarten with general education funds may facilitate more literacy funds being targeted directly toward high-need students’ literacy needs.

Istation Vocabulary

Survey respondents frequently indicated that they decided to focus more on vocabulary after reviewing Istation scores. Similarly, when asked what they would change about the IRI, almost all interview participants said they would change the vocabulary section. Overall, teachers expressed frustration with this section, claiming that it lowers overall scores and that it is difficult to provide instruction that improves performance on it.

Multiple participants feel that the Istation vocabulary words are regionally specific and that students often encounter words they reasonably may not have heard before. Some participants noted that the vocabulary section asks students to select a picture to complete a sentence and that the pictures are often confusing. For example, when asked to select a picture meaning “land,” students reportedly select a picture of a helicopter landing rather than a picture of scenery.

Since Istation is a national program, the state has limited control over the vocabulary selected for the test, yet this problem poses a threat to the test performance of students across the state.

The impacts of COVID-19-related school closures are likely to be seen in future evaluations. The survey included questions about teacher perceptions of student performance, changes made to regular instruction, and the process of providing virtual interventions to high-need students. This information is summarized here and could be considered in future evaluations to help contextualize scores.

Performance

Teachers were asked how their students performed on the fall 2020 IRI and how they expect students to perform on the spring 2021 IRI, each compared to previous years (Table 6).

Teachers are noticing differences in students this year beyond the obvious knowledge gaps caused by school closures and disrupted instruction at the end of the previous school year. Some teachers reported that students lack educational stamina and have social and behavioral learning gaps that are impairing their ability to learn and progress.

Table 6: Teacher Perception of Student IRI Performance Compared to Previous Years

| Compared to previous years | Fall 2020 (perceived) | Spring 2021 (expected) |
|---|---|---|
| Better | 13% | 12% |
| The same | 35% | 18% |
| Worse | 48% | 18% |
| Too early to know | N/A | 50% |
| N/A | 3% | 2% |

Although schools are not closed this year, the continuing pandemic is affecting student learning. Many LEAs are providing instruction in a hybrid format, with students alternating between in-person and virtual learning. Some schools allow students to attend entirely virtually while their peers are physically in school, and in some cases teachers are expected to teach both groups concurrently. Teachers are aware that their in-person instruction may shift to fully virtual learning if enough students or teachers are exposed to COVID-19.

Instruction

In response to these conditions, 80% of respondents reported needing to adjust their usual instruction patterns. To address learning gaps, many teachers began the school year teaching content students would have learned in the previous grade and reteaching foundational skills. Teachers are also teaching the current required curriculum, slowing its pacing to account for student stamina, and simplifying student expectations. Another strategy is adding more small-group work and differentiated instruction to account specifically for the wide range of student abilities in the classroom. This allows whole-group instruction to remain largely unchanged while individual students close learning gaps at their own pace.

Teachers have had to adjust their curriculum to account for lost time. The most common response is to prioritize curriculum and reduce content depth. The second most common is to focus on instruction and dedicate less time to practice and engaging projects. Some teachers have increased homework to give students more practice and review opportunities.

Respondents teaching students in person on alternating days described trying to complete all necessary instruction in person and dedicating online learning days to practice. This makes in-person instruction content-heavy and demanding of student concentration. Many teachers, in both hybrid and fully in-person classes, reported increasing digital learning within the classroom. These teachers feel the need to prepare students in case virtual learning becomes necessary again.

Virtual Literacy Interventions

Online and hybrid-format teachers provided insight into virtual intervention processes. Most teachers providing fully online instruction have intervention groups and meet with them online. These groups may be led by a support staff member or by the teacher. A few teachers reported also meeting virtually with individual students. A common challenge these teachers reported is that they cannot guarantee a student will attend the scheduled meeting.

Teachers in a hybrid format tend to have students who require intervention complete online or physical assignments on virtual learning days to supplement in-person interventions. Some LEAs have intervention students come into the school for small-group instruction on virtual instruction days.

This report is IPI’s third evaluation of Idaho’s Literacy Intervention Program. While past reports focused almost exclusively on quantitative analysis of student-level IRI data, this report integrates a more qualitative approach to provide a more well-rounded perspective of the Program. This includes the perspectives of both teachers in the classroom and administrators overseeing their LEA’s plan.

IPI’s analysis of program design finds that the current IRI is generally perceived to do a fair job of identifying students in need of literacy intervention, but many teachers prefer to pair it with additional forms of assessment, such as in-class observations, to ensure that students are accurately assessed. Those who administer Istation’s optional progress monitoring tests find them helpful, while other LEAs prefer alternative monitoring instruments.

Approximately half of teachers reported receiving and reviewing their LEA’s Literacy Intervention Plan. One administrator noted that while they did not forward the full plan to teachers, the areas they were responsible for were communicated to them. Increased communication may be one area for improvement.

In terms of the Program’s use of funds, expense categories are generally stable over time, with personnel accounting for the largest share of state literacy fund use. Many administrators indicated that, given more financial resources, they would increase personnel spending by hiring more aides, thereby increasing hands-on time between teachers and students. There is also an opportunity for more professional development on literacy: teachers identified it as a need, and administrators expressed willingness to increase it with added resources.

Use of Program funds for all-day kindergarten is inconsistent across the state. Few LEAs use Program funds to fully fund all-day kindergarten, although several use them to fund it partially. Given the benefits of all-day kindergarten that are well documented in academic research, there is concern that treating it as a literacy-only issue rather than a general education issue might prevent literacy-specific resources from being used to address literacy problems more directly.

An analysis of the program effectiveness suggests several Program strengths and potential opportunities. In particular, the Program facilitates targeted instruction using individual student data to both identify learning gaps and allow teachers to differentiate their instruction. This helps ensure that the students who need the most help receive the most attention. Additionally, the Program does a good job of serving students by directing state funding to areas that can help them.

Several opportunities exist to help increase program effectiveness, though. Several respondents expressed a desire to use Program funds to offer kindergarten readiness instruction. This approach is viewed as a way to help ensure that all students enter kindergarten on an equal learning level, allowing teachers to better prioritize their time and positioning students to better succeed in subsequent grade levels. Allowing Program funding to be used in those later grades, such as helping grade 4-6 students who are at a K-3 reading level, is another opportunity that can help increase overall literacy statewide. Using professional development opportunities to create a more unified approach to literacy instruction statewide is another.

In sum, while the current Program does several things well, there remain areas for improvement. The qualitative approach of this year’s evaluation provides an opportunity to examine how various Program elements are perceived and handled in the classroom, while prior years’ evaluations provide a more quantitative assessment of the Program. Together, both approaches will provide the most accurate picture.

Regions

Education regions in the state were defined as follows:

- Region 1: Benewah, Bonner, Boundary, Kootenai & Shoshone counties

- Region 2: Clearwater, Idaho, Latah, Lewis & Nez Perce counties

- Region 3: Ada, Adams, Boise, Canyon, Gem, Payette, Valley, Washington, partial Elmore & partial Owyhee counties

- Region 4: Blaine, Camas, Cassia, Gooding, Jerome, Lincoln, Minidoka, Twin Falls, partial Elmore & partial Owyhee counties

- Region 5: Bannock, Bear Lake, Caribou, Franklin, Oneida, Power & partial Bingham counties

- Region 6: Bonneville, Butte, Custer, Clark, Fremont, Jefferson, Lemhi, Madison, Teton & partial Bingham counties

Teacher Survey

IPI developed and administered an online survey of K-3 teachers using the Qualtrics platform. The survey was in the field from November 4th, 2020 through November 20th, 2020. In order to reach as many K-3 teachers in Idaho as possible, IPI worked with staff at the Idaho State Department of Education (SDE) to facilitate distribution of an anonymous survey link to teachers with instructions on how to participate.

There were 494 teacher responses with usable data from 71 different LEAs from every region in the state. A summary of respondent characteristics follows:

- By Grade Level
  - 21% Kindergarten teachers (105)
  - 29% 1st grade teachers (140)
  - 22% 2nd grade teachers (106)
  - 21% 3rd grade teachers (105)
  - 3% Multi-grade teachers (15)

- By Region
  - Region 1: 5% (23)
  - Region 2: 6% (28)
  - Region 3: 42% (208)
  - Region 4: 21% (101)
  - Region 5: 13% (62)
  - Region 6: 12% (59)
  - Virtual Schools: 1% (5)
  - N/A: <1% (3)

- By School Type
  - Traditional Public: 85% (341)
  - Brick and Mortar Charter: 14% (56)
  - Virtual Charter: 1% (6)

- On average, teachers have been teaching in Idaho for 12 years (SD = 8.5)

- On average, teachers have been teaching their current grade for 7 years (SD = 6.6)

- Only 10% are new to their current grade

- No patterns were found between any demographic characteristic and the literacy-focused survey responses.

Administrator Survey

IPI developed and administered an online survey of LEA literacy program administrators using the Qualtrics platform. The survey was in the field concurrently with the teacher survey from November 4th, 2020 through November 20th, 2020. In order to reach as many administrators in Idaho as possible, IPI worked with staff at the Idaho Office of the State Board of Education (OSBE) to facilitate distribution of an anonymous survey link to all literacy plan contacts with instructions on how to participate.

There were 101 administrator responses with usable data from 72 different LEAs from every region in the state.

Summary of respondents by region:

- Region 1: 8% (8)

- Region 2: 9% (9)

- Region 3: 27% (27)

- Region 4: 14% (14)

- Region 5: 27% (28)

- Region 6: 14% (14)

- Virtual Schools: 1% (1)

Respondents held the following positions:

- 18% Administrators (general)

- 5% Curriculum Coordinators

- 3% Directors of Accountability

- 9% Federal Programs Specialists

- 5% Instructional Specialists

- 4% Literacy Coordinators

- 33% Principals or Assistant Principals

- 3% Reading Specialists

- 21% Superintendents or Assistant Superintendents

Teacher Interviews

At the end of the Teacher Survey, respondents were asked if they would be willing to participate in a focus group session about teaching literacy in Idaho. Those who indicated “Yes” or “I would like more information” were asked to provide an email address so that IPI researchers could contact them.

Researchers collected email addresses for 127 prospective participants. An incorrect survey setting during the first two hours of data collection prevented respondents from seeing the email address question. As a result, IPI was unable to collect email addresses from approximately 30-40 respondents who otherwise may have been willing to participate.

Participants were sorted by education region, and at least one Zoom session was scheduled for each region. In all, nine sessions were scheduled between November 20th, 2020 and December 1st, 2020. Despite best efforts, not all regions were represented in the interviews because of scheduling conflicts.

In total, 11 K-3 teachers participated in interviews across the various sessions.

Secondary Data

In addition to collecting original data, IPI obtained individual LEA Literacy Intervention Plans from OSBE, which were then coded and entered into a dataset. LEA-level Literacy Intervention Expenditure Report data were also obtained from SDE and entered into the same dataset.